The AI Safety Summit: regulating the talking shop

Nick France at Sectigo considers the purpose of the UK’s AI Safety Summit and explores the rise of AI deepfakes and the critical place of identity management in keeping people secure

As the UK hosts its first global AI safety summit at Bletchley Park on 1 and 2 November 2023, fierce debate has centred on the rise of large language models (LLMs) such as ChatGPT and the copyright and data access issues associated with their use.

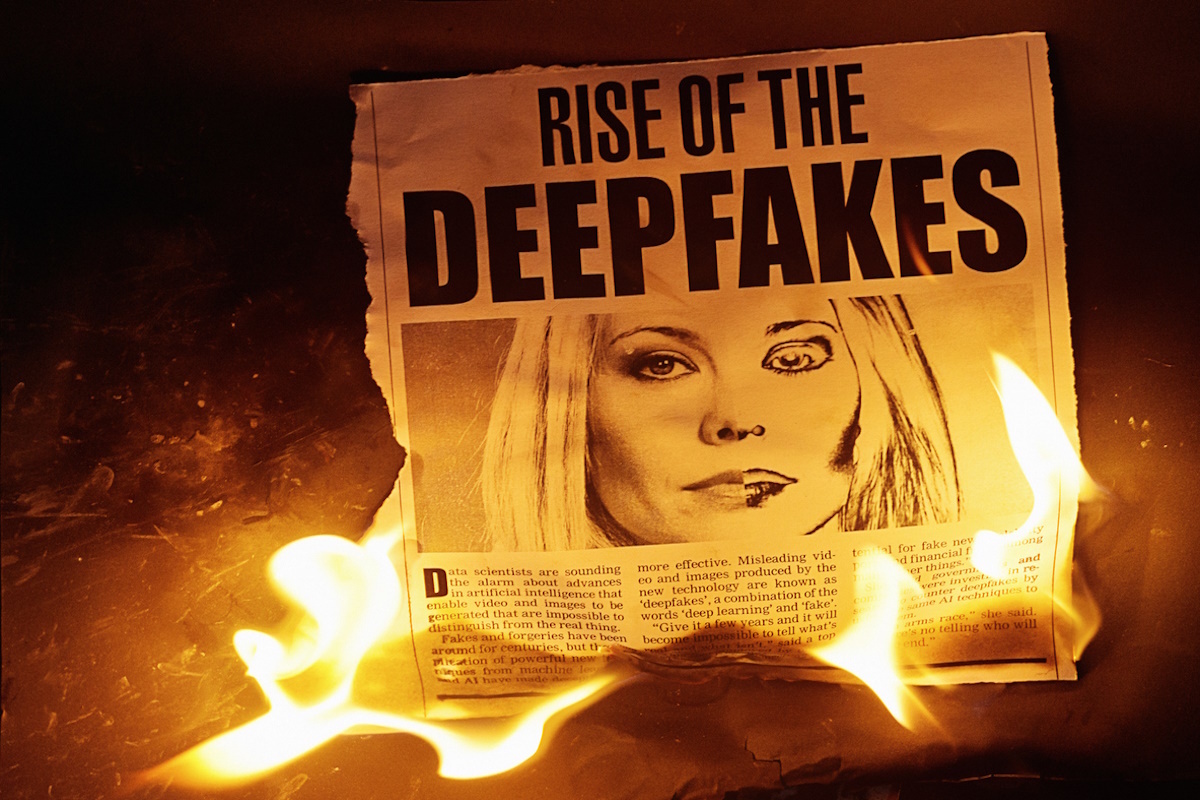

Lost in the hubbub is the threat that Artificial Intelligence (AI) poses to the backbone of security: securing digital identities. The importance of this cannot be overstated. The ever-increasing sophistication and use of AI deepfakes now make verifying the identity and authenticity of a device or individual almost impossible.

At the AI Safety Summit, privacy and security must be at the forefront. The longer we wait, the more adept hackers will become in using our own biometrics against us.

The rise of biometrics

As passwords have become less secure over time, we have turned to biometrics as an alternative form of authentication. The rise of facial recognition, fingerprint scanners, and other biometric technologies has been touted as a stronger, more convenient replacement for weak passwords and codes.

However, biometrics also have significant vulnerabilities. Physical attributes like faces, voices, and fingerprints can be spoofed, replicated, and manipulated using today’s technology.

This calls into question the security of relying on biometrics as a form of identity verification. While biometric authentication may seem a more secure form of verification, anything about your physical appearance that can be measured - eyes, face, voice - can be replicated. Our faces are everywhere, so facial features make a poor identifier: many people already have access to this data.

The widespread availability of facial data has enabled the creation of increasingly convincing AI-generated deepfake videos. This has led to concerning uses such as synthesising footage of celebrities or public figures to spread misinformation, like the recent creation of deepfakes of Martin Lewis promoting fraudulent financial schemes.

The significant advancements in deepfake technology now enable the creation of entirely fabricated yet remarkably lifelike video and audio, rendering the former technology nearly obsolete. As deepfakes become more sophisticated, our reliance on facial recognition and other biometric authentication technologies needs to be re-evaluated. Unlike passwords, our faces, voices, gaits and other physical attributes cannot simply be changed.

This democratisation of technology means that the very biometrics we use for identification can be used against us. When it comes to identity, relying on anything physical that can be measured - from irises to facial structures to voices - provides a false sense of security.

Summits and regulations

The UK’s AI safety summit has brought together experts to align on the agreed risks of AI and how to mitigate them. However, privacy and security risks must be a key focus. Biometrics and facial recognition are now common in law enforcement and border control, often implemented without considering long-term limitations.

Recently, MPs called for a ban on live facial recognition (LFR) use by UK police over growing ethical concerns. The ethical dilemmas of implementing such AI technologies should be a summit focal point.

We must recognise the flaws in the identity management systems used in law enforcement today. The longer we wait to improve them, the more sophisticated hackers will get in exploiting weaknesses. It’s imperative we act now to address the vulnerabilities in how identities are managed and used before the technology proliferates further.

Towards a security-first approach

If digital security is to evolve at the pace of technological advancement, businesses must look to smarter solutions than biometrics alone. One of the most reliable ways to authenticate identity and resist AI-enabled fraud is through cryptographic methods like Public Key Infrastructure (PKI).

Rather than relying on vulnerable physical attributes, PKI uses digital certificates and public-private key pairs for identification and encryption. Even if a private key is somehow compromised, the certificate tied to it can be revoked and a new key pair issued, without changing any inherent physical characteristics. For all the advantages of biometrics, PKI provides a higher level of multi-factor security and integrity that can withstand AI threats of the future.
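To make the contrast with biometrics concrete, here is a minimal sketch of key-based authentication and revocation. It is an illustration under loud assumptions, not a real PKI: the `Registry` class is a hypothetical stand-in for a certificate authority's records, the identity "alice" is invented, and the signatures are textbook RSA over deliberately tiny, fixed primes, which is insecure and used only so the example runs on the Python standard library. A production system would rely on a vetted cryptographic library and proper X.509 certificates.

```python
import hashlib
import secrets

# ASSUMPTION: toy parameters for illustration only. These Mersenne primes are
# far too small and too structured to provide any real security.
TOY_PRIME_PAIRS = [(2**127 - 1, 2**89 - 1), (2**107 - 1, 2**61 - 1)]
PUBLIC_EXPONENT = 65537


def make_key_pair(p, q):
    """Return (public_key, private_key) for textbook RSA (needs Python 3.8+)."""
    n = p * q
    d = pow(PUBLIC_EXPONENT, -1, (p - 1) * (q - 1))  # modular inverse
    return (n, PUBLIC_EXPONENT), (n, d)


def digest_as_int(message, n):
    """Hash the message and reduce it into the range of the modulus."""
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % n


def sign(private_key, message):
    n, d = private_key
    return pow(digest_as_int(message, n), d, n)


def verify(public_key, message, signature):
    n, e = public_key
    return pow(signature, e, n) == digest_as_int(message, n)


class Registry:
    """Hypothetical stand-in for the records a certificate authority keeps."""

    def __init__(self):
        self.trusted = {}     # identity -> currently valid public key
        self.revoked = set()  # public keys that must never be accepted again

    def enrol(self, identity, public_key):
        if public_key in self.revoked:
            raise ValueError("this key has been revoked")
        self.trusted[identity] = public_key

    def revoke(self, identity):
        self.revoked.add(self.trusted.pop(identity))

    def authenticate(self, identity, challenge, signature):
        public_key = self.trusted.get(identity)
        return public_key is not None and verify(public_key, challenge, signature)


registry = Registry()
old_public, old_private = make_key_pair(*TOY_PRIME_PAIRS[0])
registry.enrol("alice", old_public)

# Normal login: the verifier sends a fresh random challenge, the user signs it.
challenge = secrets.token_bytes(32)
accepted_before = registry.authenticate("alice", challenge, sign(old_private, challenge))

# The private key leaks, so it is revoked and replaced with a new key pair.
registry.revoke("alice")
new_public, new_private = make_key_pair(*TOY_PRIME_PAIRS[1])
registry.enrol("alice", new_public)

challenge = secrets.token_bytes(32)
stolen_key_accepted = registry.authenticate("alice", challenge, sign(old_private, challenge))
new_key_accepted = registry.authenticate("alice", challenge, sign(new_private, challenge))
```

The point of the sketch is the last few lines: once the old key is revoked, a signature made with it is rejected, while the replacement key works immediately. A compromised face or voice offers no equivalent step.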

AI deepfakes make it trivial to fake videos and audio of public figures and everyday people alike. When faces and voices can no longer be trusted, we must look at better ways to validate authenticity and trace the origin of media.

Cryptographic authentication through PKI represents the kind of solution needed today to ensure security and trust in our increasingly digital world. Without it, we leave ourselves vulnerable to bad actors hijacking our biometrics through the very same artificial intelligence we hope to regulate.

The UK summit presents a pivotal opportunity to learn from past limitations and pave the way for a privacy and security-first approach.

Alternative, spoof-resistant cryptographic technologies must remain central to the discussions if business security is to stand a chance of keeping up with AI advancement. One of the best defences against AI deepfakes is PKI-based authentication, which uses public and private keys rather than biometric data that can be spoofed or faked.

If the AI Safety Summit fails to address the major security threats AI poses to today’s encryption and identity verification systems, it will have failed to recognise the long-term fallout we could be facing with AI.

Nick France is CTO of SSL at Sectigo

Main image courtesy of iStockPhoto.com

Business Reporter Team

23-29 Hendon Lane, London, N3 1RT

020 8349 4363

© 2024, Lyonsdown Limited. Business Reporter® is a registered trademark of Lyonsdown Ltd. VAT registration number: 830519543