The legalities of automation and how to avoid the pitfalls

James Crayton and Luke Jackson from Walker Morris discuss the potential legal implications of automation and how businesses should deal with them.

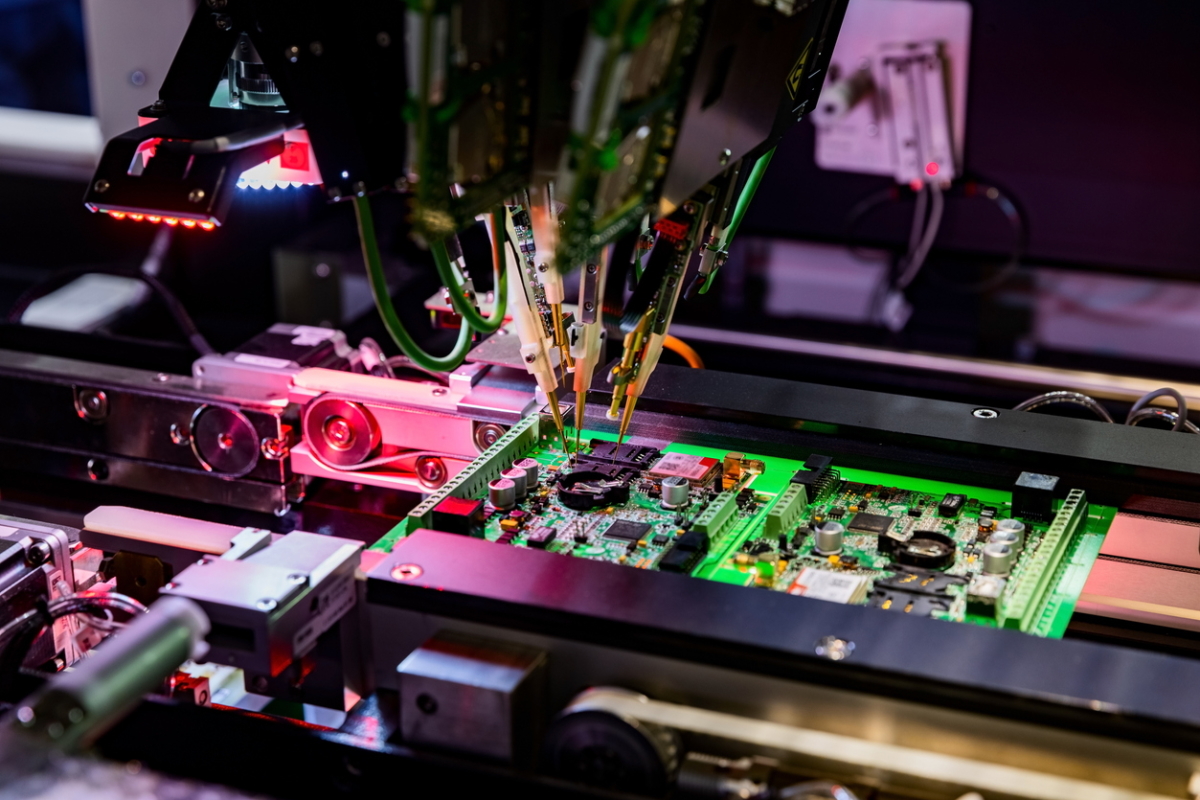

Transformative automation with leading-edge technology boosts business productivity, reduces lead times and delivers products and services more quickly. It also frees up staff to focus on other value-adding tasks.

However, as with any new tech, it has far-reaching legal implications that affect people and processes. So it’s vital that any organisation looking to use new robot tech understands its responsibilities and liabilities.

Although the “Factory of the Future” offers greater capacity, improved data collection and fewer errors in production, it will still hinge on the right foundations being laid and savvy, long-term decisions being nailed at the outset.

IP in AI – who owns the clever data?

Often associated with robotics and automation, artificial intelligence (commonly referred to as ‘AI’) in its simplest form covers the simulation of human intelligence in machines, which are programmed to think like real people.

The increased use of AI-powered automation has data protection implications. A crucial, complex issue is who is responsible for protecting the stored details of users and other people: the vendor supplying the AI or the client using the technology?

Where an automated system is capturing information about employees, suppliers, customers, etc. (e.g. through a camera, microphone or sensor), much of that is likely to be personal data. It is critical that the client user of the technology understands the extent of the data being captured and puts in place adequate protections to remain compliant with data protection law. Ignorance is not an acceptable excuse for non-compliance.

These measures are likely to include steps to ensure that personal data captured by automation isn’t passed back to the supplier without the permission of the people involved. The GDPR’s swingeing ‘data protection principles’ demand that personal information is held securely and used fairly, lawfully and transparently. Fines for breaches run into the tens of millions, so responsibility for compliance must be established upfront and known by all involved.

Expect the law’s relationship with AI to be a hot topic over the next 12 months. The Government recently published its proposed approach to regulating the technology in the UK and a full white paper is expected towards the end of 2022. Six core compliance principles are identified, as well as a requirement for legal accountability for an AI tool to rest with an identifiable person or company.

Licensing: full ownership or just a right to use?

Licensing is another key, complex issue. While manufacturers may own the robot, the ‘AI-powering software’ is usually provided to them on a "licensed" basis. This means that while a customer has the right to use the technology, this is generally only on specified terms, and the supplier retains overall ownership of it.

The question of who owns the tech solution (or, more to the point, its outputs) is crucial. Customers often think they do, then run into an IP infringement claim when they try to sell it on or offer it as a service.

This is a common conflict of expectations. The manufacturer wants to pay for a unique innovation that will bring massive competitive advantage, not one that might also be offered to its competitors (even worse, a refined, improved version following its early adoption), while the vendor wishes to market it widely.

Understandably, few suppliers are willing to devise bespoke one-offs that they can only sell once – and the price for doing so will be exorbitant, far higher than for an application that is used by many. Multi-user solutions can also mean that innovation is disseminated across the user community much more quickly than with single-user systems.

This means there must be agreement on whether the software will be developed, priced and supplied as an entirely custom solution. If not, the parties must agree on what counts as the core software and which bespoke tweaks the customer has exclusivity over.

The bottom line is to ensure you understand the legal basis on which you are using a technology and how this is dealt with in the contract. Read the small print and make sure you know exactly what the terms are, how they will affect your business and what it is responsible for or reliant on.

Health and Safety

Health and Safety is another key area of concern because deploying robots alongside a human workforce will naturally raise safety issues and staff concerns.

Control and mechanical errors can lead to faulty, prohibited or unexpected actions by a robot, which can cause death or serious injury. Operation by people who are unfamiliar with the systems and their safety protocols is also incredibly dangerous, while slapdash installation of the systems in the first place, or power surges, can lead to accidents and electric shocks.

Recent changes to UK sentencing guidelines mean that penalties for serious health and safety breaches can exceed £10m in some cases. Businesses must take the advice of their own H&S advisers on these, record that they have done so and put appropriate policies and procedures in place.

It’s vital that any business with concerns about automation, or which doesn’t understand it fully, gets guidance and help quickly – whether it’s legal counsel, technical support or both – before problems arise.

Automation’s use is accelerating at a dizzying rate and, while it should be taken advantage of, businesses must know the dangers this tech brings and how to protect themselves and use it properly.

James Crayton is Head of Commercial and Luke Jackson is Director at law firm Walker Morris. Find out more about Walker Morris’ expertise and the ground-breaking automations powering industry worldwide: the factory of the future

Main image courtesy of iStockPhoto.com

Business Reporter Team


© 2025, Lyonsdown Limited. Business Reporter® is a registered trademark of Lyonsdown Ltd. VAT registration number: 830519543