American View: “Three Line Thelma’s” Lessons on Dangerously Oversimplifying Security Training

I hate to admit it, but personal computers screwed things up for everybody. Thriving in a security career nowadays requires being able to embrace two or more contradictory beliefs based on the same core idea and promote them all as absolutely necessary. Hardly surprising then that our non-technical users and stakeholders accuse us of being confusing, disingenuous, or just plain mad. That’s a perfectly logical conclusion to arrive at after we exhort them to follow different interpretations of the exact same rule, each seeming to indisputably contradict the others.

This is entirely our own fault. Learning science dictates that we must simplify how we explain complex subjects so our users can quickly understand, memorize, and implement critical security behaviours. That’s necessary because we frequently leave our users confused when we attempt to teach convoluted topics in their entirety. The sheer number of branching paths and twisted outcomes that must be factored in to usefully understand a cybersecurity concept makes perfect sense to us after years of study and application. A non-technical user, though, will quickly get overwhelmed by the messy details and tune out. Hence, the need to simplify our control protocols down to easily grasped instructions.

A great example of this is the aphorism we learned as children to “always lock the door.” We were taught that if we leave our home’s front or back door unlocked while we’re away, a burglar might take advantage of the opportunity to nick all our stuff. “Always lock the door” is easy to understand, easy to perform, and – most important – easily inculcated into an automatic, habitualized behaviour. Even now, as an adult, you check to ensure you locked the front door when leaving home. It’s consistent performance of a simple action to mitigate a specific risk.

Except … this physical security rule might work the other way around once you get to work. Say you work in retail, for example. Public-facing doors must be kept unlocked and unobstructed during business hours. Why? Because during emergencies – like a fire or active shooter threat – customers and staff must swiftly evacuate through the nearest exit. A locked door could get people killed. This leads to a parallel rule that contradicts the first one: “never lock the door.”

I know where your mind went: people are smart enough to apply situational rules based on context. Even a pre-teen working their first retail job can separate the rules for “always lock the external door at home” and “never lock the public doors at work.” It’s a minor complicating factor, easily remedied by a slight (but reasonable!) increase in complexity. No big deal, right?

Sure. I agree. The issue is that rules are much easier to memorize and habitualize when there’s a personal motivation in play. Locking the front door to your home protects your stuff from theft. Keeping the doors to the company’s facility open protects you as well as your customers from danger. Those rules embed because you’re protecting yourself in both cases. Enlightened self-interest is an excellent “hook” for teaching security controls.

The problem is that most security controls don’t have any sort of personal impact. It’s one thing to teach someone how to protect their personal records; it’s quite another to teach that same person how to protect their employer’s critical records. Your bank account info? Yeah, you can easily imagine how much life would sour if a bad guy stole it. That’s a natural emotional trigger that makes a person pay attention. But something as vague and impersonal as the company’s secret project files? While not impossible, it’s much more challenging to make an emotional connection between the impersonal concept of “the company” and the self of a normal user. Intellectually? Most people don’t harbour malice toward their employer. They understand they need their job to live and want to get through the workday with minimum drama. That’s a far cry from the natural fear of negative consequences that come from a threat to someone’s personal life.

This isn’t a personal or professional failing. People are hard-wired to prioritise threats to their own existence over threats that only affect others. The more abstract and impersonal a threat seems, the lower it falls in one’s personal calculus. Some employers attempt to mitigate this by threatening their employees with dire consequences if they don’t perform their security controls correctly. This method often backfires, since the impetus for the user shifts from performing the control meaningfully to only performing the steps required to escape blame if the control fails.

I first noticed this theory in action back in 1996. I was in the process of transferring from a base in Texas to an Army hospital in rural Alabama. I’d visited my new station to arrange billeting and was flying back home. This required me to catch a puddle-jumper at a small, regional airport. The local strip had only one security gate and a handful of employees on duty to check in a half-dozen travellers. Busy day for them.

In true nerd form, I was travelling with my personal computer – an Apple PowerBook Duo subnotebook – in my carry-on. I sent my briefcase through the scanner. The X-ray tech running it saw my computer and flagged my bag for further inspection. This was well after the destruction of Pan Am 103 over Lockerbie, so American airport security workers were worried about explosives being smuggled onto flights inside the shells of electronic devices.

The security staffer running the metal detector made me wait until all the other travellers had finished screening, then ordered me to remove my laptop from my bag and show her “three lines of text.” Her exact words. “I need to see three lines of text.” Thanks to that, I’m going to call her “Three Line Thelma” from here on.

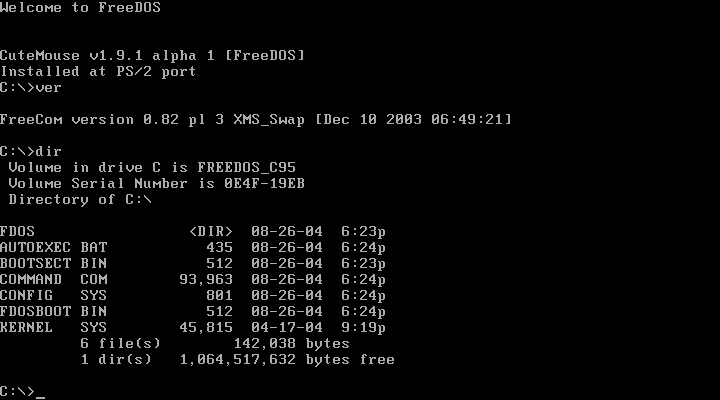

I understood what Thelma needed: she wanted me to power up my computer to “prove” that it was real and couldn’t, therefore, be stuffed full of plastic explosives instead of computer guts. Sure. Fine. For travellers with a typical IBM PC (running DOS, Windows 3, or the painfully new Windows 95), their power-on sequence would start with a black screen, then show sequential lines of crude white text.

The problem was, my computer was a Macintosh: the king (in its day) of the Graphical User Interface. My machine didn’t boot up with a screen of white text on a black background like the more common PCs did. Mine also had a worn-out battery. I had to pull everything out of my bag, plug the AC adapter into a wall, then run the entire boot sequence until the desktop appeared. All the while, Thelma kept glaring at me for wasting her time.

Once I finally had my PowerBook booted, I asked Thelma if she was satisfied. She chanted “I need to see three lines of text.” I tried to explain that Macs boot differently than the PCs she was used to. This was obviously a working computer. Thelma dug in her heels and insisted “I need to see three lines of text!”

The only way to meet Three Line Thelma’s demands was to launch a word processing app … sloooooooooowly … then create a new, blank document, then type an entire paragraph of text while she watched. Minimum three lines’ worth, or else it’s not a computer! Once Thelma was finally satisfied, she let me shut my computer down, pack it up … and leave the secure area to visit the ticket counter to get re-booked … because by the time we’d finished I’d missed my flight.

I knew what had happened: Miss Thelma knew nothing about computers (completely normal considering the era). Thelma’s bosses must have laid down a rule that all computers being carried onto airplanes must be inspected to “prove” that they were real and not plastic shells crammed full of explody stuff. That was a fine rule; one I’m still in favour of.

That said, trying to teach basic computer use to non-technical people could be challenging. To mitigate this problem, the process designers had likely settled on a simple, easy-to-memorize aphorism: “observe at least three lines of text.” It was a good enough rule given the mainstream technology of the day. The trouble was that our Thelma didn’t understand computers well enough to recognize or adapt when her rubric didn’t fit. By simplifying their instructions well below the threshold where education was required, the process designers turned their rule into a counterproductive hindrance instead of an effective security control.

More importantly, Three Line Thelma didn’t seem personally motivated to learn anything about computers. She not only displayed zero interest in why my strange machine didn’t act like the PCs she’d trained on; she voiced resentment and frustration at my machine not functioning as she’d expected. I was burdening her by not bringing gear she could recognize to the airport!

This resistance to learning was probably exacerbated by the reason Thelma was given for why the tiresome rule had been implemented. The threat Thelma had been ordered to mitigate – explosives carried onto a commercial airplane – wouldn’t affect her personally. She was staying at the airport; if she screwed up, there would be an enquiry … but so long as Thelma had rigidly followed her instructions – “I need to see three lines of text.” – she couldn’t be held responsible for the resulting disaster. She could state with pride that she’d performed her job exactly as directed; someone else could get stuck with the blame.

I appreciate that this is a silly example. Still, I’m sure you get my point: yes, simplifying control language is often necessary for helping non-technical employees understand, remember, and habitualize cybersecurity control tasks. I’m all for it. Clear, precise, and memorable language is crucial for delivering effective security training.

That said, oversimplification can backfire. When you attempt to apply one universal process to a control that changes – or even reverses! – based on changes in context (as per the door locking example from earlier), it’s easy for users to get confused and vapour lock. Control tasks that change based on context must be taught in terms of easily differentiated contexts.

Additionally, security controls must be taught with some sort of emotional component, so the user has at least some personal investment in the control’s successful execution. Trainers must prompt enough of an empathetic reaction that the students are willing to internalize reasons to want to comply. Just saying “do it or you’ll be fired” will always fall flat, especially in a business culture where compliance and enforcement are inconsistent (which is to say, in most organisations).

Ignore these warnings at your peril. Oversimplifying security control instructions might not make you miss a flight … anymore … but it might well lead to ransomware spreading like wildfire across your network because some non-technical, emotionally disengaged user did exactly what you taught them to do without regard to the context … and not what they should have done given the complicated nature of the threat.

Keil Hubert

© 2025, Lyonsdown Limited. Business Reporter® is a registered trademark of Lyonsdown Ltd. VAT registration number: 830519543