Why generative AI hasn’t replaced search

Sponsored by Coveo

Generative AI and search need each other to ensure accuracy, relevance and up-to-date enterprise knowledge.

When ChatGPT and other generative AI technologies first appeared, many questioned whether they would make traditional search obsolete. Generative AI, powered by large language models (LLMs), has transformed information access, making it easier to engage in conversational queries and receive human-like responses. However, traditional search hasn’t disappeared and remains as crucial as ever. Why has generative AI not replaced search, and what role does search play today?

Generative AI’s limitations

Despite their early promise, LLMs have significant limitations that affect their reliability. The primary issue is “hallucination”, in which an LLM confidently produces a response that is factually incorrect. LLMs excel at generating text that sounds plausible, but they have no built-in mechanism for verifying whether their output is true.

Another limitation is the relevance and freshness of responses. Although LLMs are trained on vast datasets, their knowledge ends at their training data’s cut-off date, so their responses may be outdated, and they can also reflect biases present in that data. Furthermore, tools such as ChatGPT are often trained largely on publicly available information, the use of which may raise compliance concerns under privacy laws and regulations such as GDPR.

Challenges of enterprise data

A significant challenge is that generative AI has no access to proprietary enterprise data unless it is specifically integrated with it. Companies constantly produce new information, and updating LLMs with the latest internal data is costly and time-consuming. Enterprises need up-to-date information for customer interactions and employee support, and that is difficult to deliver by relying on generative AI alone.

The role of search in generative AI

For generative AI to be effective in business, it requires access to accurate, relevant and real-time information – making search a vital component. Search has become essential because companies need control over the data AI draws from to ensure responses are accurate.

While enterprises want to leverage generative AI, they frequently struggle to retrieve relevant data, especially when content is outdated or spread across fragmented sources. Retrieval-augmented generation (RAG) has emerged as an effective solution to these challenges, combining search with generative AI to improve the relevance and accuracy of responses. By linking a retrieval system to an LLM, RAG gives the model access to current, reliable data at the time of each query, reducing the risk of hallucinated responses and improving accuracy.

How RAG improves generative AI responses

With a RAG approach, the generative model relies on a “source of truth” provided by the enterprise. A search system identifies and pulls the most relevant data from sources such as knowledge bases and delivers it to the LLM, which then produces responses grounded in this verified information.
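To make the retrieve-then-generate flow concrete, here is a minimal Python sketch. It is illustrative only and rests on several assumptions: the term-overlap scoring in `retrieve` is a toy stand-in for a real search engine (no stemming, no stop-word removal), `Document`, `retrieve` and `build_grounded_prompt` are hypothetical names rather than any vendor's API, and the final LLM call is left as a comment because the text does not imply any particular model client.

```python
from __future__ import annotations

import re
from dataclasses import dataclass


@dataclass
class Document:
    doc_id: str
    text: str


def tokenize(text: str) -> set[str]:
    # Lowercase the text and split it into alphanumeric terms.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: list[Document], k: int = 2) -> list[Document]:
    # Toy ranking (assumption): score each document by the number of query terms it shares.
    query_terms = tokenize(query)
    scored = [(len(query_terms & tokenize(d.text)), d) for d in docs]
    # Drop non-matching documents, then keep the k highest-scoring ones.
    matches = sorted((pair for pair in scored if pair[0] > 0), key=lambda pair: pair[0], reverse=True)
    return [d for _, d in matches[:k]]


def build_grounded_prompt(query: str, passages: list[Document]) -> str:
    # Number each passage so the model can cite it, and restrict the model to these sources.
    sources = "\n".join(f"[{i}] ({p.doc_id}) {p.text}" for i, p in enumerate(passages, start=1))
    return (
        "Answer using only the sources below and cite them by number. "
        "If the sources do not contain the answer, say so.\n\n"
        f"Sources:\n{sources}\n\nQuestion: {query}"
    )


# Hypothetical enterprise knowledge base acting as the "source of truth".
knowledge_base = [
    Document("kb-101", "Refunds are issued within 14 days of a return request."),
    Document("kb-202", "Our office is closed on public holidays."),
    Document("kb-303", "To request a refund, open a return request in your account."),
]

query = "How do I request a refund?"
passages = retrieve(query, knowledge_base)
prompt = build_grounded_prompt(query, passages)
print(prompt)
# answer = llm_client.generate(prompt)  # hypothetical LLM call; any model client could be used here
```

A production retriever would use a proper ranking model and the prompt wording would be tuned; the point of the sketch is only that the model is handed numbered, enterprise-supplied passages instead of answering from its training data alone.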

Search’s role in RAG provides multiple advantages, such as:

- Improved accuracy and relevance: responses are grounded in content retrieved for the specific query

- Reduced hallucination: grounding responses in real data makes AI models less likely to produce incorrect answers

- Timely, cited information: responses are based on current data, and sources can be cited

- Secure content access: retrieval can respect existing access permissions, safeguarding confidential or proprietary information

- Cost efficiency: eliminates the need for continuous retraining of LLMs
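The secure-access and citation points can be illustrated with a short sketch under stated assumptions: each document carries a hypothetical `allowed_groups` set (an empty set means the document is public), and ranking is again simple term overlap rather than a real search engine. The design choice it shows is filtering by permission before ranking, so restricted content never reaches the prompt, and returning a numbered citation list that an application could display alongside the generated answer.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Doc:
    doc_id: str
    title: str
    text: str
    # Hypothetical ACL: groups allowed to read this document; empty means public.
    allowed_groups: frozenset[str] = field(default_factory=frozenset)


def visible_to(user_groups: set[str], doc: Doc) -> bool:
    # Public documents are visible to everyone; others need at least one shared group.
    return not doc.allowed_groups or bool(doc.allowed_groups & user_groups)


def secure_retrieve(query_terms: set[str], docs: list[Doc], user_groups: set[str], k: int = 3) -> list[Doc]:
    # Enforce permissions before ranking, so restricted text can never reach the prompt.
    candidates = [d for d in docs if visible_to(user_groups, d)]
    scored = [(len(query_terms & set(d.text.lower().split())), d) for d in candidates]
    ranked = sorted((p for p in scored if p[0] > 0), key=lambda p: p[0], reverse=True)
    return [d for _, d in ranked[:k]]


def citations(passages: list[Doc]) -> list[str]:
    # Numbered source list the application can display next to the generated answer.
    return [f"[{i}] {d.title} ({d.doc_id})" for i, d in enumerate(passages, start=1)]


docs = [
    Doc("hr-1", "Leave policy", "employees accrue 25 days of annual leave", frozenset({"employees"})),
    Doc("fin-7", "Salary bands", "salary bands and annual leave buyback rules", frozenset({"finance"})),
    Doc("pub-3", "Holiday calendar", "public holidays are in addition to annual leave"),
]

hits = secure_retrieve({"annual", "leave"}, docs, user_groups={"employees"})
print(citations(hits))  # the finance-only document is excluded for this user
```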

This search infrastructure can serve multiple generative AI applications – such as chatbots, customer support agents and digital assistants – enabling them to pull from a unified knowledge base without needing to replicate data across platforms. This approach enhances efficiency, security and accuracy for enterprise applications.
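A rough sketch of the shared-infrastructure idea follows, assuming a toy in-memory inverted index; `SharedIndex`, `upsert` and `search` are hypothetical names, not a Coveo API. Several applications query one index, and updating a document changes what every application retrieves immediately, without retraining any model.

```python
from __future__ import annotations

from collections import defaultdict


class SharedIndex:
    """A toy inverted index shared by several AI applications (hypothetical, for illustration)."""

    def __init__(self) -> None:
        self.docs: dict[str, str] = {}
        self.postings: defaultdict[str, set[str]] = defaultdict(set)

    def upsert(self, doc_id: str, text: str) -> None:
        # Remove the old postings for this document, then index the new text.
        old = self.docs.get(doc_id)
        if old is not None:
            for term in set(old.lower().split()):
                self.postings[term].discard(doc_id)
        self.docs[doc_id] = text
        for term in set(text.lower().split()):
            self.postings[term].add(doc_id)

    def search(self, query: str) -> list[str]:
        # Return IDs of documents matching any query term, most matching terms first, ties by ID.
        counts: defaultdict[str, int] = defaultdict(int)
        for term in set(query.lower().split()):
            for doc_id in self.postings.get(term, ()):
                counts[doc_id] += 1
        return sorted(counts, key=lambda d: (-counts[d], d))


index = SharedIndex()
index.upsert("policy-1", "returns accepted within 30 days")
index.upsert("faq-2", "shipping takes 3 to 5 days")

# The policy changes: re-index the document in place instead of retraining a model.
index.upsert("policy-1", "returns accepted within 60 days")

# Two different applications (say, a chatbot and a support agent) query the same index.
chatbot_hits = index.search("returns window")
agent_hits = index.search("how many days")
print([index.docs[d] for d in chatbot_hits])
print(agent_hits)
```

Because the content lives in one index rather than being copied into each application, a single update is visible to every consumer at once, which is the efficiency the paragraph above describes.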

Generative AI: a complement, not a replacement for search

The advent of generative AI marked a new phase in human-AI communication. Yet, while generative models have impressive language capabilities, they are not substitutes for search when it comes to knowledge retrieval. Search remains essential to provide timely and accurate information that generative AI can draw upon to produce meaningful responses.

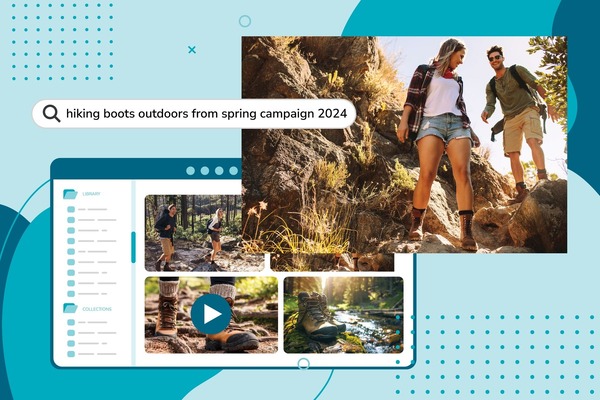

Instead of replacing search, generative AI has heightened its importance, making search a cornerstone in the enterprise AI toolkit. As generative AI continues to evolve, search will expand alongside it, enabling information retrieval across various formats, including text, images, video and audio.

To see how a robust search foundation can enhance your AI strategy, connect with Coveo and discover how quality retrieval can improve your enterprise’s success with generative AI.

by Sébastien Paquet

Sébastien Paquet is VP of Machine Learning at Coveo. He earned his PhD in Artificial Intelligence from Laval University in 2006 and has extensive experience in research and development, particularly in military intelligence and knowledge management. Since 2014, he has led Coveo’s machine learning team, focusing on enhancing information retrieval, recommendations and personalisation. His current work includes large-scale data analysis and natural language processing.

© 2024, Lyonsdown Limited. Business Reporter® is a registered trademark of Lyonsdown Ltd. VAT registration number: 830519543