Data management in the age of AI

Charles Southwood at Denodo argues that, with AI on the rise, it’s time for businesses to enhance their data strategies

As we step into a new year, AI continues to dominate the conversation. The rapid advancement of generative AI, coupled with its expanding integration into business operations, is placing heightened demands on analytics and IT leaders in particular.

Businesses venturing into generative AI and large language models (LLMs) – our recent research found that about 51% of UK organisations fall into that category – encounter challenges when it comes to data quality, ethical compliance and cost management.

This underscores the pivotal role of robust data management. Without a strong data management foundation, even the most advanced AI projects risk producing unreliable, low-quality or non-compliant results.

Many businesses have already implemented data management strategies, which have become increasingly essential throughout the ongoing digital transformation of business. However, the unprecedented volume and disparate nature of the data generated by AI necessitate a complete paradigm shift in how that data is managed.

A new approach to data management

Traditional methods of data management, such as batch-loading data into warehouses, are proving inadequate for delivering real-time insights: data loaded in batches is only as current as the most recent load. Persevering with these methods while attempting to implement AI will only impede organisational progress.

Organisations that proactively invest in a modern, robust data management framework will be better positioned to unlock the full business potential of generative AI, LLMs and other advanced technologies.

We’re already seeing innovative ways to overcome the shortcomings of traditional data management. Enterprises are investing in architectures such as data mesh and running data literacy programmes to support better data-driven decision-making.

This last point is key: managing data as part of an AI-based business strategy is complex and will involve a learning curve. Less tech-savvy employees and users will need support and enablement to get up to speed if businesses are to benefit fully from the potential of AI.

Integrating AI to democratise data

Data management vendors are helping businesses address this complexity by embedding AI capabilities in their solutions, such as natural language querying supported by LLMs. This allows business users with no knowledge of SQL or data storage formats to self-serve and securely access the data they need.

Similarly, AI models can analyse users’ previous patterns of data consumption and recommend other data sources that business users may find useful, for example: “Other users who looked at these data sets also found these other ones useful.”

This can be truly transformational in uncovering new data insights for business users as well as advanced analysts and data scientists. It all contributes to improving access to relevant data right across the business and will ultimately help businesses deliver better services to their end customers.
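The “other users who looked at these data sets” style of recommendation mentioned above can, in its simplest form, be built from co-occurrence counts: data sets that many users access together are suggested to anyone who has opened one of them. The Python sketch below is a minimal illustration under stated assumptions, not a description of how Denodo or any other vendor implements the feature. The access log, the data set names and the `build_cooccurrence` and `recommend` functions are all hypothetical, and production systems typically draw on far richer usage signals and models.

```python
from collections import Counter, defaultdict
from itertools import combinations
from typing import Dict, Iterable, List

# Hypothetical access log: which data sets each user has queried.
access_log: Dict[str, List[str]] = {
    "alice": ["sales_2023", "customer_master", "web_traffic"],
    "bob": ["sales_2023", "customer_master", "returns"],
    "carol": ["sales_2023", "inventory"],
    "dave": ["customer_master", "returns"],
}


def build_cooccurrence(log: Dict[str, List[str]]) -> Dict[str, Counter]:
    """Count, for every pair of data sets, how many users accessed both."""
    co: Dict[str, Counter] = defaultdict(Counter)
    for datasets in log.values():
        # Deduplicate per user so repeat visits don't inflate the counts.
        for a, b in combinations(sorted(set(datasets)), 2):
            co[a][b] += 1
            co[b][a] += 1
    return co


def recommend(co: Dict[str, Counter], viewed: Iterable[str], top_n: int = 3) -> List[str]:
    """Suggest data sets that other users accessed alongside the ones viewed."""
    viewed = set(viewed)
    scores: Counter = Counter()
    for ds in viewed:
        for other, count in co.get(ds, Counter()).items():
            if other not in viewed:  # never recommend what the user already has
                scores[other] += count
    # Highest score first; ties broken alphabetically for a stable result.
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return [name for name, _ in ranked[:top_n]]


co = build_cooccurrence(access_log)
print(recommend(co, ["sales_2023"]))  # → ['customer_master', 'inventory', 'returns']
print(recommend(co, ["returns"]))     # → ['customer_master', 'sales_2023']
```

Scores are summed across everything the user has already viewed, so opening several related data sets sharpens the suggestions rather than replacing them.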

The ethical challenges of AI and data management

AI undoubtedly brings other challenges too, chiefly ethical risks. While GenAI and LLMs have the potential to bring huge efficiencies to businesses, they could also have unexpected or unintended consequences that need mitigating. Two aspects need to be considered to ensure that AI use is ethical and robust, and that its output is accurate:

1. Regulations: Given the global nature of many organisations, regulatory standards – whether imposed by governments or adopted voluntarily by sectors – need to be set at both national and global levels, with clear compliance guidelines provided. This is crucial in helping businesses and their employees to use AI ethically. While regulations are still evolving, companies must monitor the data they’re using to avoid bias, inaccuracies or copyright infringement in AI model outputs.

2. Access: Access to the broadest possible range of data sources during AI training is essential to prevent unintended consequences or assumptions from being embedded in AI models. The greater the volume and breadth of data sources used to train a GenAI model, the more robust its capabilities. Consequently, data virtualisation platforms, which provide seamless data integration and delivery, are emerging as indispensable components of AI architectures.
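Data virtualisation, in essence, presents a single logical view over several underlying sources and resolves each request against those sources when it is made, rather than first copying everything into a central store. The toy Python sketch below illustrates only that core idea, under loudly stated assumptions: the `VirtualView` class, the in-memory SQLite `customers` table and the CSV `orders` export are all hypothetical, and real virtualisation platforms add query optimisation, pushdown to the sources, caching, security and governance that this example does not attempt.

```python
import csv
import io
import sqlite3
from typing import Callable, Dict, Iterator, List

Row = Dict[str, object]
Source = Callable[[], List[Row]]


class VirtualView:
    """Hypothetical logical join over two live sources; no data is copied or stored.

    Each call to rows() re-queries both sources, so results reflect their
    current state at request time.
    """

    def __init__(self, left: Source, right: Source, key: str) -> None:
        self.left, self.right, self.key = left, right, key

    def rows(self) -> Iterator[Row]:
        # Index the right-hand source by the join key (a simple hash join).
        index: Dict[object, List[Row]] = {}
        for row in self.right():
            index.setdefault(row[self.key], []).append(row)
        # Stream left-hand rows and merge each with its matching right-hand rows.
        for left_row in self.left():
            for right_row in index.get(left_row[self.key], []):
                yield {**left_row, **right_row}


# Hypothetical source 1: an operational database (in-memory SQLite here).
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE customers (customer_id INTEGER, name TEXT)")
db.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "Acme"), (2, "Globex")])


def customers() -> List[Row]:
    cur = db.execute("SELECT customer_id, name FROM customers ORDER BY customer_id")
    return [{"customer_id": cid, "name": name} for cid, name in cur]


# Hypothetical source 2: a CSV export from a separate order system.
ORDERS_CSV = "customer_id,amount\n1,250\n1,100\n2,75\n"


def orders() -> List[Row]:
    reader = csv.DictReader(io.StringIO(ORDERS_CSV))
    return [{"customer_id": int(r["customer_id"]), "amount": int(r["amount"])} for r in reader]


view = VirtualView(customers, orders, key="customer_id")
for row in view.rows():
    print(row)

# A change made at the source is visible on the next read, with no reload step.
db.execute("UPDATE customers SET name = 'Acme Ltd' WHERE customer_id = 1")
print(next(view.rows()))
```

Because the view reads from its sources every time it is iterated, the renamed customer appears in the very next read, which is the contrast with batch loading drawn earlier in the article.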

The future of AI

It is the appetite for real-time data insight, among both informational and operational data consumers, that will shape the evolution of AI going forward. As we move through 2024 and beyond, businesses need to align their data strategies with the realities of AI, overhauling their data management methods to democratise data and ensure ethical use and output.

We’ve come a long way from the early chatbot implementations on websites, and there are many more exciting advances to come. The future belongs to those who take proactive steps in data management today.

Charles Southwood is Regional Vice President, Northern Europe at Denodo

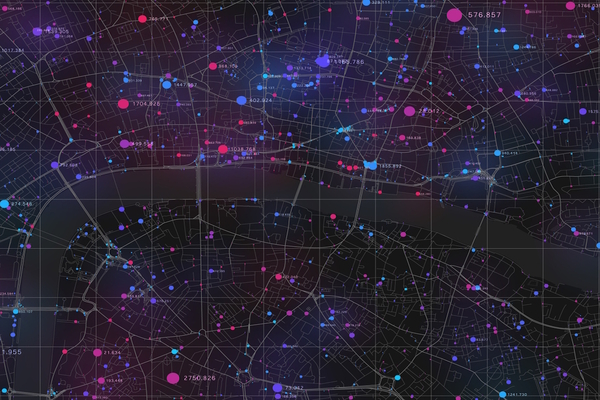

Main image courtesy of iStockPhoto.com

© 2024, Lyonsdown Limited. Business Reporter® is a registered trademark of Lyonsdown Ltd. VAT registration number: 830519543