Faster server speeds are on the horizon

Sponsored by Siemon

With emerging technologies driving increased network speeds in the data centre, switch-to-server connection speeds are faster than ever. Ryan Harris of Siemon explains why short-reach, high-speed cable assemblies could help maximise your budget on next-generation server systems.

Artificial intelligence, machine learning, edge computing and other emerging technologies have been widely adopted in enterprise businesses. This drives the need for data centre server systems to deliver greater speeds in support of these applications. Today’s 10 and 25 Gb/s server speeds are good enough for mobile applications with lots of requests per second and lend enough bandwidth to support high-resolution video content. But text-based generative AI, trained edge inferencing and machine learning are already in need of 100 Gb/s and beyond.

The latest developments in artificial intelligence, however, have added an entirely new level to network bandwidth and speed expectations, with graphics processing units (GPUs) empowering server systems to analyse and train video models in just a couple of weeks of processing time. This is made possible with server connectivity speeds anywhere from 200 Gb/s all the way to 400 Gb/s, and with 800 Gb/s server speeds on the horizon.

Data centre network engineers are tasked with finding a balance of cost and performance to support the different needs of their organisations. While there is a range of cabling options available to enable faster switch-to-server connections in the data centre, short-reach, high-speed cable assemblies can help maximise your data centre budget. They not only support high-speed connectivity but also provide better power efficiency and the lower-latency data transmission required for emerging applications.

Rapid deployment enables agility

High-speed point-to-point cable assemblies are typically deployed in large and small colocations distributed on the edge, and in on-premises data centres that use top-of-rack (ToR) or end-of-row (EoR) designs.

An EoR topology has a large network switch at the end of the row, with many connection points to several server cabinets in the row. EoR uses fewer access layer switches, making it easier for network engineers to deploy system updates, but it’s more complex to deploy and takes up more space. This cabling approach typically requires cable management and expensive transceiver modules used for longer reaches that are more power-hungry than short-reach point-to-point cables.

A top-of-rack design, on the other hand, enables rapid deployments in both large and small spaces. In ToR design, the network switch resides inside the cabinet and connects to servers within that cabinet, making it an ideal option for easy data centre expansion. Cable management and troubleshooting are simplified too, but the drawback lies in the number of switches that need to be managed.

High-speed server connections are typically available as direct attach copper cables (DACs), active optical cables (AOCs), or transceiver assemblies. Different cord options can support transmission speeds from 10 Gb/s all the way to 800 Gb/s, which means data centre facilities are well equipped when network equipment must be upgraded.

With the increased power consumption of new GPUs pushing servers further apart, new cooling methods are being adopted to ensure server system density remains at rack scale. In addition to advanced cooling technologies, Nvidia, a leading chip manufacturer, recently presented its new Blackwell GPUs being used in its GB200 NVL72 rack scale design at GTC 2024. As AI GPUs and server designs become increasingly dense, their footprint becomes smaller, making ToR rack-scale server systems capable of supporting today’s needs as well as potential future needs, such as video training or video inferencing.

However, there are still super-scale server systems being deployed using EoR because of power and infrastructure limitations. When there is uncertainty about how much computing power will be needed, deploying scalable ToR systems and using a hybrid cloud approach helps balance resources so there are no on-premises server systems sitting idle.

Not all cables are the same

Let’s take a closer look at the three cabling options used for server connectivity. Direct attach copper cables (DACs) are the most suitable option for in-rack connections. DACs draw virtually no power (only 0.01 W to 0.05 W), supporting high-density server connections in the data centre while also offering low latency and the lowest costs. Inner cabinet server connections can be made from top to bottom with only three metres of cabling. While passive DAC jumper cables lack active chips, limiting their reach at higher speeds, active copper cables are emerging as a solution. These active cables offer both longer lengths and smaller diameters, extending the reach of short-reach copper for server connections well into the future.

Active optical cables (AOCs) support longer lengths at higher speeds and smaller cable diameters for links up to 30 metres. AOCs using low-power multimode short-reach optics are more expensive than DACs, however. The small cable diameter is ideal for higher-density inner cabinet breakout connections in ToR and can simplify switch-to-switch aggregation connections located several cabinets away. The point-to-point cable assembly design makes rapid deployments easy.

Transceivers using structured fibre cabling can be used to cover lengths of up to 100 metres, for example when connecting multiple rows. Although transceivers are the most expensive of the three options, transceivers using fibre feature a small cable diameter, and the existing cabling infrastructure can be re-used.
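As a rough illustration, the reach figures quoted above can be turned into a simple selection rule. The Python sketch below is only that: the function name `pick_cable` and the table `REACH_LIMITS_M` are hypothetical, and treating three metres as the ceiling for DACs is an assumption drawn from the in-cabinet figure mentioned earlier, not a specification. Actual reach depends on speed, cable gauge and vendor.

```python
# Reach limits in metres, taken from the figures quoted in this article.
# Assumption: 3 m is treated as the DAC ceiling purely for illustration.
REACH_LIMITS_M = [
    ("DAC", 3.0),
    ("AOC", 30.0),
    ("transceiver + structured fibre", 100.0),
]


def pick_cable(link_length_m: float) -> str:
    """Return the first (lowest-cost) option whose reach covers the link."""
    if link_length_m <= 0:
        raise ValueError("link length must be positive")
    # Options are ordered from least to most expensive, as described above.
    for name, max_reach_m in REACH_LIMITS_M:
        if link_length_m <= max_reach_m:
            return name
    raise ValueError("link is longer than any option covered in this sketch")


if __name__ == "__main__":
    for length_m in (2, 15, 80):
        print(f"{length_m} m -> {pick_cable(length_m)}")
```

Because the options are listed in order of cost, the first match is also the cheapest one that reaches, which mirrors the trade-off described in this section.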

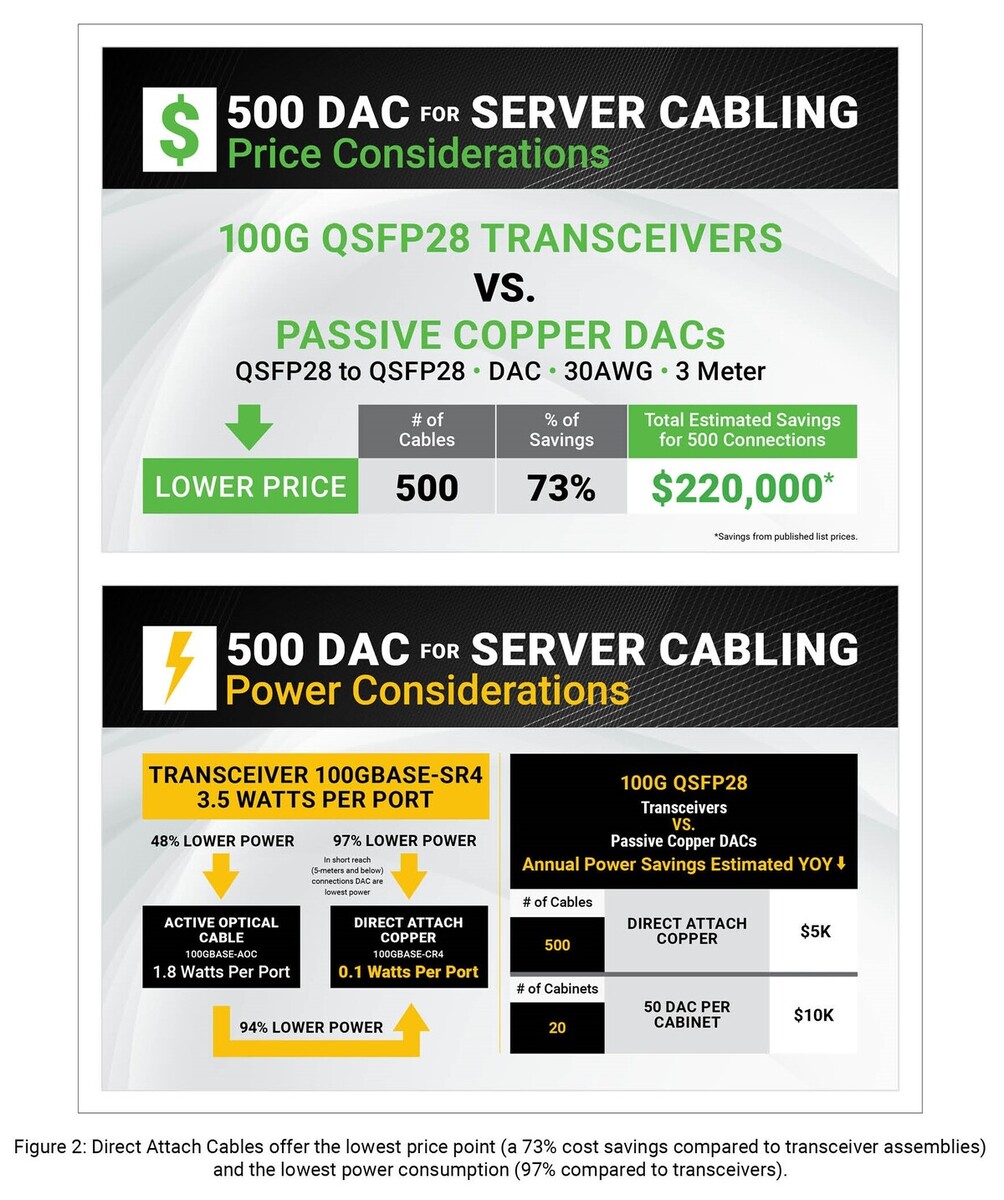

The following charts provide a comparison of purchasing and power costs based on 500 server connections using 100G (25G per lane) DAC cables. These numbers multiply quickly in high-volume switch-to-server deployments. As speeds increase, so do the price and the power consumption of the active chips. Price and power budgets make cabling an important decision when planning a deployment strategy to support next-generation server systems.
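To see how quickly per-link figures add up, here is a minimal Python sketch of the arithmetic for the 500-connection case. It uses only the DAC power range quoted earlier (0.01 W to 0.05 W) and assumes that figure applies per cable assembly. The function names are hypothetical, and no AOC or transceiver wattages are included because the article does not state them; substitute datasheet values to compare other options.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def total_power_watts(links: int, watts_per_link: float) -> float:
    """Total continuous power draw for a number of identical links."""
    return links * watts_per_link


def annual_energy_kwh(links: int, watts_per_link: float) -> float:
    """Energy used over a year of continuous operation, in kilowatt-hours."""
    return total_power_watts(links, watts_per_link) * HOURS_PER_YEAR / 1000


if __name__ == "__main__":
    links = 500
    # DAC range quoted in this article; assumed to be per cable assembly.
    for watts in (0.01, 0.05):
        print(
            f"{watts} W per link: "
            f"{total_power_watts(links, watts):.1f} W total, "
            f"{annual_energy_kwh(links, watts):.1f} kWh per year"
        )
```

On these assumptions, 500 passive DAC links draw between roughly 5 W and 25 W in total, so any option with active chips consuming around a watt or more per link will dominate the power budget at volume.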

There are several solutions available for connecting servers on the edge and in the data centre, which makes forward-looking planning decisions more complex than ever before. Following industry-standard best practices is a safe bet. In an era of great change, being nimble is a great advantage in staying up to speed. When it comes to selecting cable assemblies for next-generation network topologies, network managers can benefit from an agile deployment model that uses point-to-point high-speed cable assemblies in ToR server systems. Working with a trusted cable assembly manufacturer can help in making an informed decision when reviewing all the options.

For more information, visit siemon.com

Business Reporter Team



© 2025, Lyonsdown Limited. Business Reporter® is a registered trademark of Lyonsdown Ltd. VAT registration number: 830519543