The EU AI Act: a new global standard for artificial intelligence

Are you compliant?

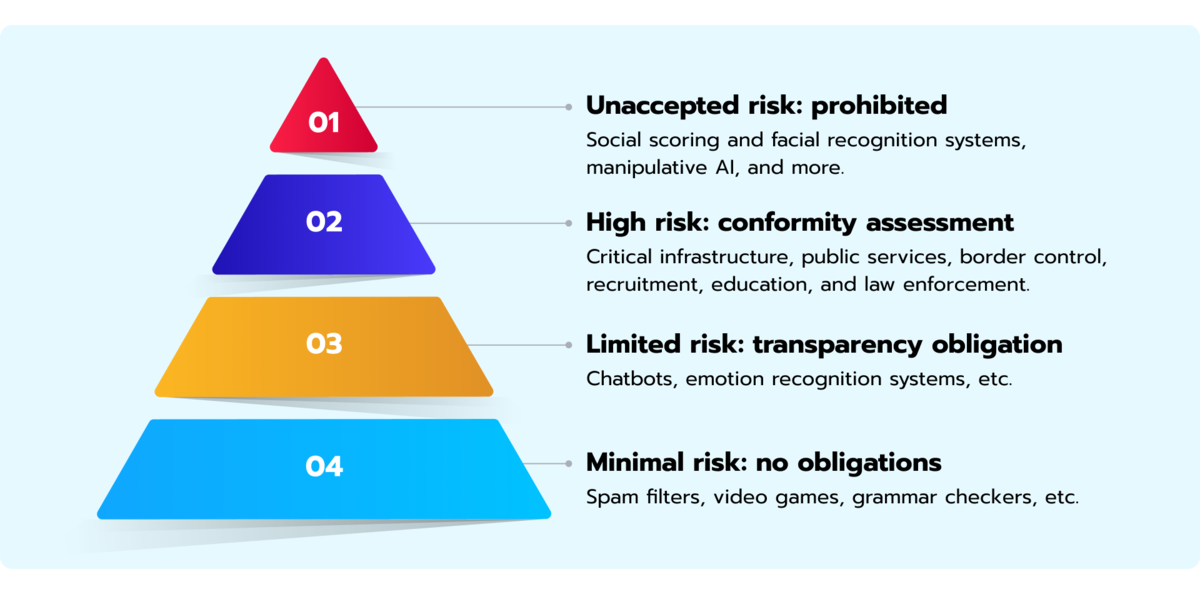

On 1 August 2024, the European Union (EU) Artificial Intelligence (AI) Act, one of the world’s first laws regulating AI, entered into force. The AI Act sets out prohibited AI practices and classifies AI systems according to the risk they pose to society. It also sets penalties for non-compliant organisations: for the most serious breaches, fines can reach €35 million or 7 per cent of the entity’s total worldwide annual turnover for the preceding financial year, whichever is higher.
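To make the “whichever is higher” rule concrete, here is a minimal Python sketch of the fine ceiling for breaches of the prohibited-practice rules. It assumes turnover is given in euros for the preceding financial year. The function name is hypothetical, and it computes only the statutory maximum, not an actual fine, which authorities set case by case. It also reflects the Act’s rule that for SMEs and start-ups the lower of the two figures applies.

```python
from decimal import Decimal

# Ceiling for infringements of the prohibited-practice rules:
# a fixed amount or a share of total worldwide annual turnover.
FIXED_CAP_EUR = Decimal("35000000")
TURNOVER_SHARE = Decimal("0.07")


def max_fine_prohibited(turnover_eur: Decimal, is_sme: bool = False) -> Decimal:
    """Return the statutory ceiling (not the actual fine) for a prohibited-practice breach.

    Hypothetical helper: larger undertakings face whichever figure is higher,
    SMEs and start-ups whichever figure is lower.
    """
    if turnover_eur < 0:
        raise ValueError("turnover must be non-negative")
    share = turnover_eur * TURNOVER_SHARE
    return min(FIXED_CAP_EUR, share) if is_sme else max(FIXED_CAP_EUR, share)


if __name__ == "__main__":
    # 7% of €2bn is €140m, which exceeds €35m, so €140m is the ceiling.
    print(max_fine_prohibited(Decimal("2000000000")))
    # 7% of €100m is €7m, below €35m, so the fixed €35m cap applies.
    print(max_fine_prohibited(Decimal("100000000")))
    # For an SME with the same turnover, the lower figure (€7m) applies.
    print(max_fine_prohibited(Decimal("100000000"), is_sme=True))
```

Decimal is used instead of float so that percentage calculations on large turnover figures do not pick up rounding noise.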

While these are the early days of the AI Act’s implementation, its potential impact on the tech industry and society is already a subject of intense debate. In this article, we’ll look at the implications of the EU AI Act for businesses and how to prepare for the challenges and opportunities it presents.

Definition of the EU AI Act

The AI Act is a law that regulates the development and use of AI systems within the EU. It covers numerous actors in the AI value chain, namely providers, deployers, importers, distributors, product manufacturers and authorised representatives. While each of these operators is subject to a different set of obligations, providers (developers) of high-risk AI systems face the most extensive regulatory requirements. Importantly, the AI Act applies to providers and deployers of AI whose systems, or the outputs of those systems, are used in the EU, regardless of where they are based.

Significance of the EU AI Act for global AI regulation

As mentioned, the EU AI Act is one of the world’s first legal frameworks for AI, and it strives to balance innovation with safety and ethical considerations. The European Commission published the first draft in 2021, and negotiations over the text continued until its adoption in 2024. The AI Act bears some resemblance to the EU’s General Data Protection Regulation (GDPR), which encouraged governments across the globe to pass similar legislation at the national level. More broadly, the EU AI Act sets a precedent for other jurisdictions to follow.

Impact of the EU AI Act on the tech industry

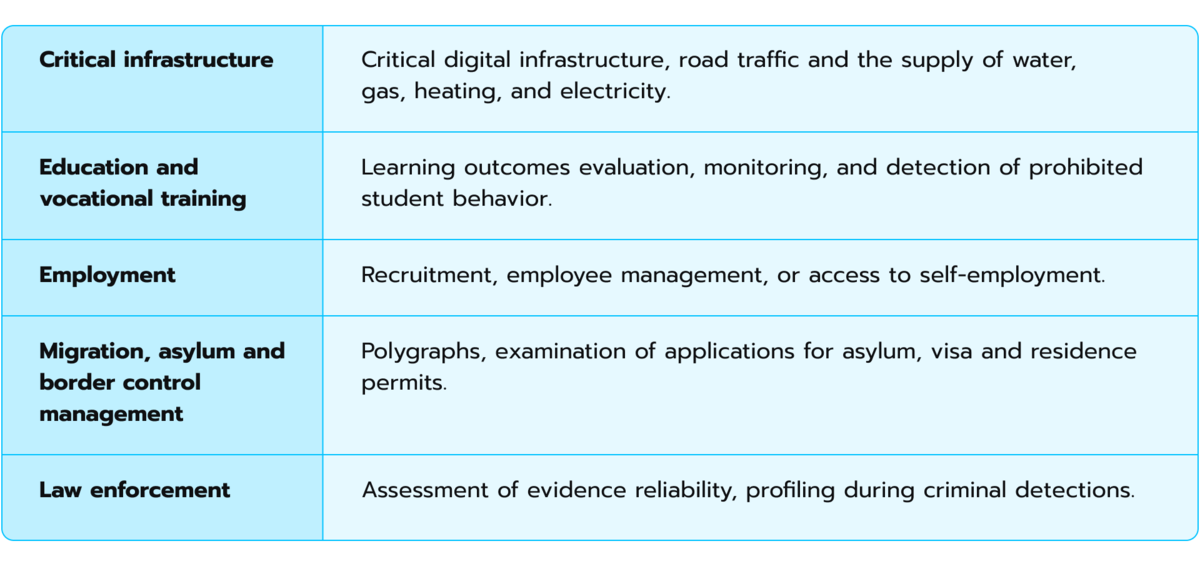

The AI Act will directly affect numerous companies that develop or use AI systems in the EU. High-risk AI systems, such as those used in critical infrastructure and law enforcement, will face the most significant impact (see Figure 1). These systems will require extensive risk assessments, data governance measures and continuous human oversight. Even for lower-risk AI applications, the AI Act introduces new transparency and accountability obligations. Wherever significant risk is involved, companies will need to provide clear information about the AI systems they use.

The new legislation imposes important obligations on large technology companies, but startups and small and medium-sized enterprises (SMEs) will also need to make adjustments. Although regulatory sandboxes may offer them some support, complying with the AI Act’s requirements will still demand substantial effort. Note that the law does not apply to purely personal, non-professional use of AI or to AI systems developed solely for scientific research and development.

Prohibited AI practices

The AI Act explicitly lists several AI practices that pose an unacceptable risk and are therefore banned, some of them subject to narrow exceptions. These include:

- Manipulative or deceptive AI systems that distort behaviour and impair decision-making, or that exploit a person’s vulnerabilities (age, disability or other attributes)

- Biometric categorisation systems that infer sensitive attributes such as race, political opinions or sexual orientation (with exceptions)

- Social scoring systems

- Emotion-recognition systems used in the workplace or educational institutions (with exceptions)

- Systems that predict whether a person is likely to commit a crime based solely on profiling or personality traits (with exceptions)

- Systems that build facial recognition databases by untargeted scraping of facial images from the internet or CCTV footage

- Real-time remote biometric identification (RBI) systems used in publicly accessible spaces for law enforcement purposes (with exceptions)

These prohibitions are designed to protect fundamental rights and prevent serious societal harm.

Compliance requirements for high-risk AI systems

The EU AI Act categorises AI systems by their level of risk, and among the systems it permits, the most stringent requirements apply to high-risk ones. These are typically systems that pose a significant risk to people’s health, safety or fundamental rights.

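Examples of high-risk AI systems, listed in Annex III of the Act, include those used in critical infrastructure, education, employment, law enforcement and migration.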

Annex III systems that profile people are always categorised as high-risk under the EU AI Act. Providers must assess whether their system falls into the high-risk category, and if a provider concludes that an Annex III system does not pose a high risk, it must document this assessment before the system is placed on the market. For AI systems identified as high-risk, the AI Act imposes specific obligations on providers. These are the steps providers of high-risk systems must take:

- Set up a risk-management system

- Apply data governance to training, validation and testing data

- Prepare technical documentation

- Enable automatic record-keeping (logging) of events throughout the system’s lifecycle

- Provide instructions for use to downstream deployers

- Ensure human oversight

- Achieve appropriate levels of accuracy, robustness and cybersecurity

- Establish a quality management system
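As an illustration of how a provider might track the obligations above internally, here is a minimal Python sketch of a checklist. The class, method and obligation labels are hypothetical; the Act does not prescribe any such structure, and ticking every item is not a substitute for a formal conformity assessment.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical labels mirroring the provider obligations listed above.
OBLIGATIONS = (
    "risk management system",
    "data governance",
    "technical documentation",
    "record-keeping (event logs)",
    "instructions for deployers",
    "human oversight",
    "accuracy, robustness and cybersecurity",
    "quality management system",
)


@dataclass
class ComplianceChecklist:
    """Tracks which obligations a single high-risk system has addressed."""

    system_name: str
    done: set[str] = field(default_factory=set)

    def mark_done(self, obligation: str) -> None:
        # Reject labels that are not on the list to avoid silent typos.
        if obligation not in OBLIGATIONS:
            raise KeyError(f"unknown obligation: {obligation}")
        self.done.add(obligation)

    def outstanding(self) -> list[str]:
        # Keep the order in which the obligations are listed.
        return [o for o in OBLIGATIONS if o not in self.done]

    def all_addressed(self) -> bool:
        return not self.outstanding()


if __name__ == "__main__":
    checklist = ComplianceChecklist("credit-scoring-model")
    checklist.mark_done("risk management system")
    checklist.mark_done("technical documentation")
    print(checklist.outstanding())
    print(checklist.all_addressed())
```

Keeping the obligations in one tuple means the checklist and any reporting built on it stay in sync if the list is revised.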

General purpose AI (GPAI)

General purpose AI (GPAI) models are AI models that can perform a wide range of distinct tasks. They are typically trained on large amounts of data using techniques such as self-supervision. Large language models (LLMs) such as GPT-4o and image generation models such as Midjourney are examples of GPAI. GPAI providers must maintain technical documentation, provide information to downstream providers that integrate their models, put in place a policy to comply with EU copyright law and publish a sufficiently detailed summary of the content used for model training.

Providers of GPAI models released under free and open-source licences are exempt from the technical documentation and downstream information requirements but must still comply with copyright law and publish training data summaries. However, if such models are deemed to pose systemic risk, additional obligations apply.

A GPAI model can also be a component of a larger system that is classified as high-risk under the EU AI Act. For instance, a GPAI model might be integrated into a medical device, in which case the device as a whole is a high-risk system subject to stringent requirements. A GPAI system can itself be classified as high-risk if it meets the criteria outlined in the AI Act.

Timeline for the implementation of the EU AI Act

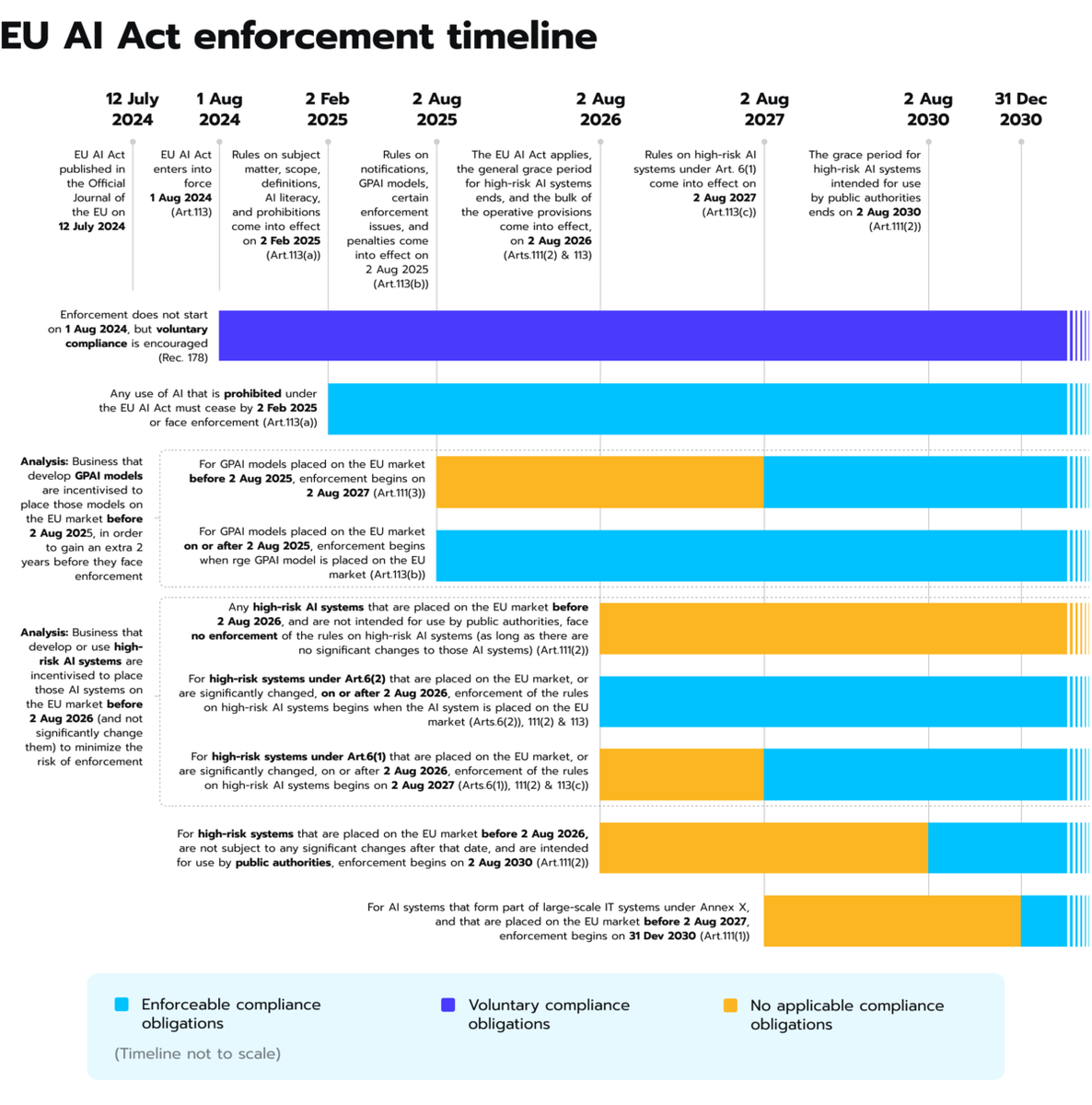

- The bans on prohibited AI practices apply six months after the Act’s entry into force (from 2 February 2025)

- Obligations for general purpose AI models apply one year after entry into force (from 2 August 2025)

- High-risk AI systems listed in Annex III must comply within two years of entry into force (by 2 August 2026)

- High-risk AI systems covered by Annex I have three years to adapt to the new rules (by 2 August 2027)

Figure 2. Most requirements under the EU AI Act will become enforceable starting 2 August 2026. Image courtesy of White & Case LLP International Law Firm
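The staggered timeline can be expressed as a simple date lookup. The sketch below hard-codes the milestone dates given above rather than adding months to 1 August 2024, because each deadline falls on the 2nd of the month, not on the exact monthly anniversary. The dictionary keys and function names are hypothetical labels chosen for illustration.

```python
from datetime import date

ENTRY_INTO_FORCE = date(2024, 8, 1)

# Dates from which each group of rules applies (as listed above).
MILESTONES = {
    "prohibited practices": date(2025, 2, 2),
    "general-purpose AI models": date(2025, 8, 2),
    "high-risk systems (Annex III)": date(2026, 8, 2),
    "high-risk systems (Annex I)": date(2027, 8, 2),
}


def applicable_on(day: date) -> list[str]:
    """Return the milestones whose rules already apply on the given day."""
    return [name for name, start in MILESTONES.items() if day >= start]


def months_after_entry_into_force(start: date) -> int:
    """Whole calendar months between entry into force and a milestone's start."""
    return (start.year - ENTRY_INTO_FORCE.year) * 12 + start.month - ENTRY_INTO_FORCE.month


if __name__ == "__main__":
    print(applicable_on(date(2025, 9, 1)))
    for name, start in MILESTONES.items():
        print(name, start.isoformat(), months_after_entry_into_force(start))
```

For example, on 1 September 2025 only the prohibitions and the GPAI obligations apply, while the two high-risk deadlines are still ahead.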


Olena Domanska PhD is a data science engineering manager at Avenga, an Assistant Professor at the Ivan Franko National University of Lviv, Ukraine, and currently a visiting researcher at the University of Manchester. A professional with more than 10 years of experience, she leads a passionate team of AI and data science experts who translate real-world business challenges into cutting-edge AI solutions across various industries, especially tech insurance, finance and pharma.

Business Reporter Team



© 2025, Lyonsdown Limited. Business Reporter® is a registered trademark of Lyonsdown Ltd. VAT registration number: 830519543