Keeping AI biases out of digital customer journeys

Olivier Koch at Onfido explains how reducing bias in artificial intelligence systems will improve customers’ digital experience

In just a few decades, artificial intelligence (AI) has become a major part of our daily lives. Hundreds of millions of people use services like ChatGPT to write emails or Midjourney to create new visuals. AI is accelerating the arrival of a new digital era, enabling us to work faster and more efficiently, to meet creative and professional challenges, and to innovate.

The use of artificial intelligence does not stop there. AI is now an integral part of services that are critical to the smooth functioning of society: loan approvals, admission to higher education, access to mobility platforms and, in the near future, access to medical care. Online identity verification, for its part, has expanded from opening bank accounts to a wide range of uses across the internet.

AI systems can, however, behave in a biased way towards end users. In just the last two months, Uber Eats and Google have discovered how seriously biased AI can threaten the legitimacy and reputation of their online services.

Humans, too, are prone to bias, and human biases can be systemic. Facial recognition offers a well-documented example: people tend to recognise members of their own ethnic group more accurately than others, a phenomenon known as own-group bias (OGB).

This is where the challenge lies. Online services have become the backbone of the economy: 60% of people use online services more today than before the pandemic. With lower processing costs and shorter turnaround times, AI is the solution of choice for businesses handling an ever-increasing volume of customers.

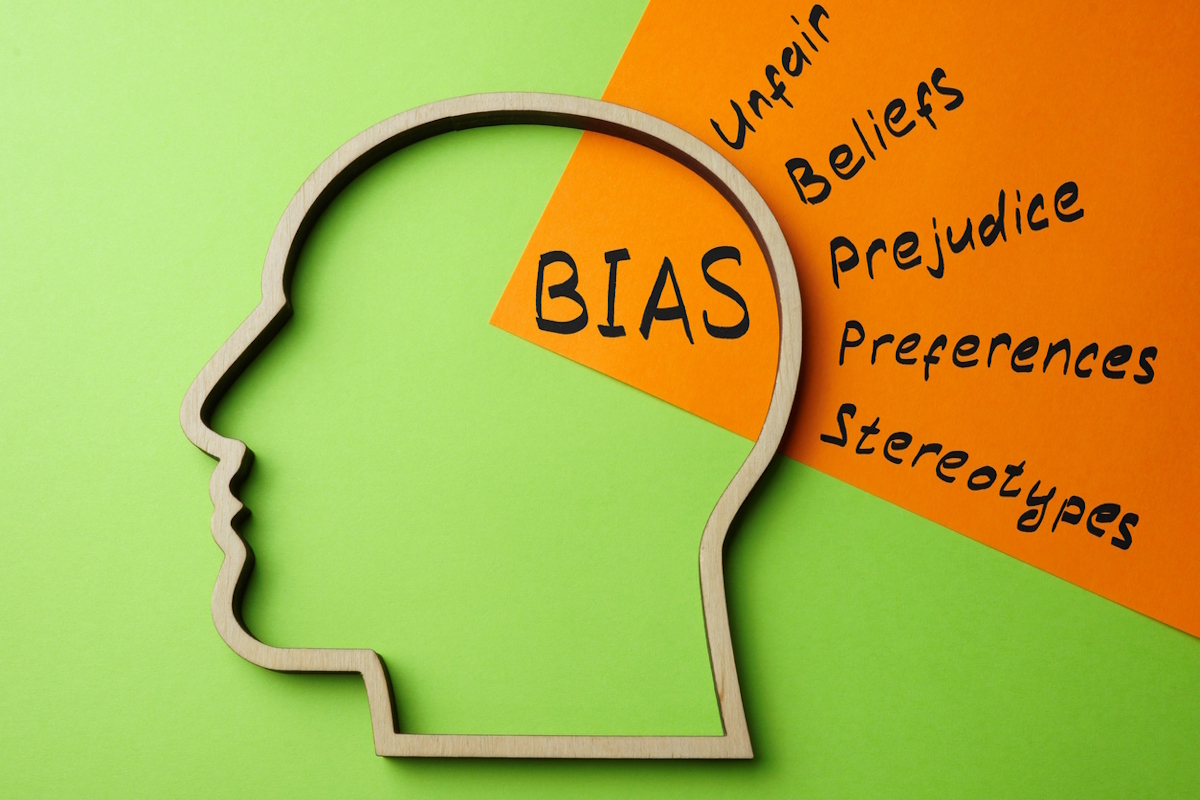

Despite all the advantages AI offers, however, companies must be aware of the biases it can introduce and of their responsibility to put the right safeguards in place, to protect against lasting damage to their reputation and to the economy as a whole.

At the heart of a bias prevention strategy are four essential pillars: identifying and measuring bias, raising awareness of hidden variables and hasty conclusions, designing rigorous training methods, and adapting the solution to the use case.

Pillar 1: identify and measure bias

The fight against bias begins with establishing robust processes for measuring it. AI biases are often subtle: hidden in vast amounts of data, they may become observable only after several correlated variables have been disentangled.

It is therefore crucial for companies using AI to adopt good practices, such as reporting measurements with confidence intervals, using datasets of appropriate size and diversity, and having appropriate statistical tools applied by qualified people.
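To illustrate what reporting a measurement with a confidence interval can look like, the Python sketch below computes a Wilson score interval for a group's rejection rate. It is a minimal example under stated assumptions: the counts are hypothetical, `wilson_interval` is an illustrative helper rather than any vendor's tooling, and the Wilson interval is only one common choice (Clopper-Pearson or bootstrap intervals are alternatives).

```python
import math


def wilson_interval(rejections: int, total: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a proportion; z = 1.96 gives roughly 95% coverage.

    Illustrative helper: `rejections` out of `total` attempts for one group.
    """
    if total <= 0:
        raise ValueError("total must be positive")
    p = rejections / total
    denom = 1 + z**2 / total
    centre = (p + z**2 / (2 * total)) / denom
    half_width = (z / denom) * math.sqrt(p * (1 - p) / total + z**2 / (4 * total**2))
    return centre - half_width, centre + half_width


# Hypothetical counts: the same observed 5% rejection rate, very different sample sizes
small = wilson_interval(5, 100)
large = wilson_interval(500, 10_000)
print(f"n=100:    {small[0]:.3f} to {small[1]:.3f}")
print(f"n=10000:  {large[0]:.3f} to {large[1]:.3f}")
```

The same 5% rejection rate yields a far wider interval from 100 attempts than from 10,000, which is why a group needs enough data before a bias can be claimed or ruled out with any confidence.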

These companies must also strive to be as transparent as possible about their biases, for example by publishing reports such as the "Bias Whitepaper" that Onfido released in 2022. Such reports should be based on real production data, not on synthetic or test data.

Public benchmarks such as the NIST FRVT (Face Recognition Vendor Test) also produce bias analyses that companies can use both to communicate about the bias in their systems and to reduce it.

Based on these observations, companies can identify where in the customer journey biases are most likely to occur and work on a solution, often by training their algorithms on more complete datasets to produce fairer results. This step lays the foundation for rigorous bias treatment and increases the value of both the algorithm and the user journey it supports.

Pillar 2: beware of hidden variables and hasty conclusions

The bias of an AI system is often hidden across multiple correlated variables. Take the example of face matching: comparing a user's biometric capture, such as a selfie, with the photo on their identity document. This step is key to verifying the user's identity.

An initial analysis shows that face matching performs worse for people with dark skin than for the population as a whole. It is tempting to conclude from this that the system, by design, penalises people with dark skin.

However, by pushing the analysis further, we observe that the proportion of people with dark skin is higher in African countries than in the rest of the world. Moreover, these African countries use, on average, identity documents of lower quality than those observed in the rest of the world.

This lower document quality explains most of the relatively poor performance of face matching. Indeed, if we measure face-matching performance for people with dark skin only in European countries, where documents are of higher quality, the bias practically disappears.

In statistical terms, "document quality" and "country of origin" are confounding variables for the relationship between "skin colour" and face-matching performance.
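A confounding effect of this kind can be reproduced with a simple stratified analysis. The Python sketch below uses entirely made-up counts: groups "A" and "B" stand in for two skin-colour groups, and document quality plays the role of the confounder. Group B's attempts are concentrated among low-quality documents, so its aggregate failure rate is higher, even though both groups fail at exactly the same rate within each document-quality stratum.

```python
from collections import defaultdict

# Hypothetical, made-up counts for illustration only:
# (group, document_quality, attempts, match_failures)
records = [
    ("A", "high", 900, 18),
    ("A", "low", 100, 10),
    ("B", "high", 200, 4),
    ("B", "low", 800, 80),
]


def failure_rates(rows, key):
    """Sum attempts and failures per key(row) and return failures / attempts."""
    attempts = defaultdict(int)
    failures = defaultdict(int)
    for row in rows:
        k = key(row)
        attempts[k] += row[2]
        failures[k] += row[3]
    return {k: failures[k] / attempts[k] for k in attempts}


# Naive view: aggregate by group only (A: 2.8%, B: 8.4%)
overall = failure_rates(records, key=lambda r: r[0])
# Stratified view: compare groups within each document-quality stratum
stratified = failure_rates(records, key=lambda r: (r[1], r[0]))

print("By group only:", overall)
print("By document quality and group:", stratified)
```

Stratifying by a single suspected confounder is only a first step; a fuller analysis would examine country of origin and other correlated variables in the same way.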

We do not give this example to argue that algorithms are unbiased (they are not), but to emphasise that measuring bias is complex and prone to hasty, incorrect conclusions.

It is therefore crucial to conduct a comprehensive bias analysis and study all the hidden variables that may influence the bias.

Pillar 3: design rigorous training methods

The training phase of an AI model offers the best opportunity to reduce its biases. Compensating for bias after training is difficult without resorting to ad hoc methods that lack robustness.

The training data is the main lever for shaping what a model learns: by correcting imbalances in the datasets, we can significantly influence the model's behaviour.

Let’s take an example. Some online services may be used more frequently by people of one gender. If we train a model on a uniform sample of production data, it will probably behave more robustly for the majority gender, to the detriment of the minority gender, for which its behaviour will be more erratic.

We can correct this bias by sampling the data of each gender equally. This will probably reduce performance somewhat for the majority gender, but to the benefit of the minority gender. For a critical service (such as admissions to higher education), this rebalancing makes perfect sense and is easy to implement.
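Equal per-group sampling can be sketched in a few lines of Python. This is an illustrative helper, `balanced_sample`, not Onfido's training pipeline; the `group` field stands in for the gender attribute in the example above, and the 900/100 split is hypothetical. Smaller groups are oversampled with replacement and larger groups undersampled, so each group contributes the same number of training examples.

```python
import random
from collections import defaultdict


def balanced_sample(examples, group_of, per_group, seed=0):
    """Draw `per_group` training examples from each group (hypothetical helper).

    Groups with at least `per_group` examples are undersampled without
    replacement; smaller groups are oversampled with replacement.
    """
    rng = random.Random(seed)
    by_group = defaultdict(list)
    for ex in examples:
        by_group[group_of(ex)].append(ex)

    sample = []
    for items in by_group.values():
        if len(items) >= per_group:
            sample.extend(rng.sample(items, per_group))
        else:
            sample.extend(rng.choices(items, k=per_group))
    rng.shuffle(sample)  # avoid ordering the training set by group
    return sample


# Hypothetical production log: 900 examples from the majority group, 100 from the minority
data = ([{"id": i, "group": "majority"} for i in range(900)]
        + [{"id": i, "group": "minority"} for i in range(900, 1000)])
train = balanced_sample(data, group_of=lambda ex: ex["group"], per_group=500)
```

Oversampling repeats minority examples, which can encourage overfitting; weighting each example's loss by the inverse of its group's frequency is a common alternative that rebalances the data without duplicating it.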

Online identity verification is often associated with critical services. Because it often relies on biometrics, it requires robust training methods that reduce bias as much as possible across the variables to which biometric systems are exposed: age, gender, ethnicity and country of origin.

Finally, collaboration with regulators such as the Information Commissioner’s Office (ICO) allows us to step back and think strategically about reducing bias in models. In 2019, Onfido worked with the ICO to reduce bias in its facial recognition software, which enabled it to drastically reduce the performance gaps of its biometric system across age and geographic groups.

Pillar 4: adapt the solution to the use case

There is no single measure of bias. In its glossary on model fairness, Google identifies at least three different definitions for fairness, each of which is valid in its own way but leads to very different model behaviours.

How, for example, should one choose between demographic parity, which forces outcomes to be equal across groups, and equality of opportunity, which takes the characteristics specific to each group into account?
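The tension between these two definitions can be shown with a toy classifier. In the Python sketch below, a prediction of 1 means "accepted" and a label of 1 means "genuinely eligible"; the data and the `fairness_gaps` helper are hypothetical. Demographic parity compares acceptance rates across groups, while equality of opportunity compares true positive rates, that is, acceptance rates among eligible people only. Because the two groups have different base rates, a perfectly accurate classifier satisfies equality of opportunity but violates demographic parity.

```python
def rate(values):
    """Mean of a list of 0/1 values; NaN if the list is empty."""
    return sum(values) / len(values) if values else float("nan")


def fairness_gaps(y_true, y_pred, groups, a, b):
    """Return (demographic parity gap, equal opportunity gap) between groups a and b.

    Demographic parity compares P(pred = 1 | group).
    Equality of opportunity compares P(pred = 1 | label = 1, group).
    """
    def selection_rate(g):
        return rate([p for p, grp in zip(y_pred, groups) if grp == g])

    def true_positive_rate(g):
        return rate([p for t, p, grp in zip(y_true, y_pred, groups)
                     if grp == g and t == 1])

    dp_gap = selection_rate(a) - selection_rate(b)
    eo_gap = true_positive_rate(a) - true_positive_rate(b)
    return dp_gap, eo_gap


# Hypothetical toy data: group A has a higher share of eligible people than group B
y_true = [1, 1, 1, 0,   1, 0, 0, 0]   # 1 = genuinely eligible
y_pred = [1, 1, 1, 0,   1, 0, 0, 0]   # 1 = accepted (a perfectly accurate model)
groups = ["A"] * 4 + ["B"] * 4

dp_gap, eo_gap = fairness_gaps(y_true, y_pred, groups, "A", "B")
print(f"Demographic parity gap: {dp_gap:.2f}")
print(f"Equal opportunity gap:  {eo_gap:.2f}")
```

Forcing the demographic parity gap to zero here would require accepting ineligible people in group B or rejecting eligible people in group A, which is why the choice of definition depends on the use case.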

There is no single answer to this question; each use case requires its own analysis of the field of application. In identity verification, for example, Onfido uses the "normalised rejection rate", which measures the system's rejection rate for each group and compares it with the rejection rate for the overall population. A rate greater than 1 indicates that the group is over-rejected, while a rate less than 1 indicates that it is under-rejected.
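Read as a ratio, which is consistent with the threshold of 1 described above, the normalised rejection rate can be sketched in Python as follows. The exact formula is an assumption rather than Onfido's implementation, `normalised_rejection_rates` is a hypothetical helper, and the decisions are made up: group X is rejected 3 times in 100 attempts and group Y 9 times in 100, against an overall rate of 6%.

```python
from collections import Counter


def normalised_rejection_rates(decisions):
    """Each group's rejection rate divided by the overall rejection rate.

    Assumed formula (ratio form). `decisions` is an iterable of
    (group, rejected) pairs, where `rejected` is a bool.
    """
    totals = Counter()
    rejections = Counter()
    for group, rejected in decisions:
        totals[group] += 1
        rejections[group] += int(rejected)

    overall = sum(rejections.values()) / sum(totals.values())
    if overall == 0:
        raise ValueError("no rejections: the normalised rate is undefined")
    return {g: (rejections[g] / totals[g]) / overall for g in totals}


# Hypothetical decisions: group X rejected 3 times in 100, group Y 9 times in 100
decisions = ([("X", i < 3) for i in range(100)]
             + [("Y", i < 9) for i in range(100)])
print(normalised_rejection_rates(decisions))
```

Group X comes out at 0.5 (under-rejected) and group Y at 1.5 (over-rejected). In practice each ratio would also need a confidence interval, since small groups produce noisy estimates.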

In an ideal world, this normalised rejection rate would be 1 for all groups. In practice, this is not the case for at least two reasons: first, because the datasets necessary to achieve this objective are not necessarily available; and second, because certain confounding variables are not within Onfido’s control (this is the case, for example, with the quality of identity documents mentioned in the example above).

Perfection is the enemy of progress

Bias cannot be completely eliminated. In this context, the important thing is to measure the bias, continuously reduce this bias, and communicate openly about the limitations of the system.

Research on bias is largely conducted in the open, and numerous publications are available on the subject. Large companies such as Google and Meta actively contribute to this body of knowledge, publishing not only in-depth technical articles but also accessible articles, training materials and datasets dedicated to bias analysis. In 2023, for example, Meta published Casual Conversations v2, a dataset designed to help evaluate bias in models. Onfido also contributes to this common effort through open-source publications and significant research on bias.

As AI developers continue to innovate and applications evolve, biases will continue to manifest. This should in no way discourage organisations from implementing these new technologies, as they promise to play a decisive role in improving their digital offerings.

Provided companies take appropriate measures to mitigate the impact of these biases, customers’ digital experiences will continue to improve. Customers will be able to access the right services, adapt to new technologies and get the support they need from the companies they want to deal with.

Olivier Koch is VP of Applied AI at Onfido

Main image courtesy of iStockPhoto.com and designer491

Business Reporter Team

23-29 Hendon Lane, London, N3 1RT

020 8349 4363

© 2024, Lyonsdown Limited. Business Reporter® is a registered trademark of Lyonsdown Ltd. VAT registration number: 830519543